¹Westlake University, ²University of Tübingen, Tübingen AI Center

³Max Planck Institute for Informatics, Saarland Informatics Campus

⁴Max Planck Institute for Intelligent Systems

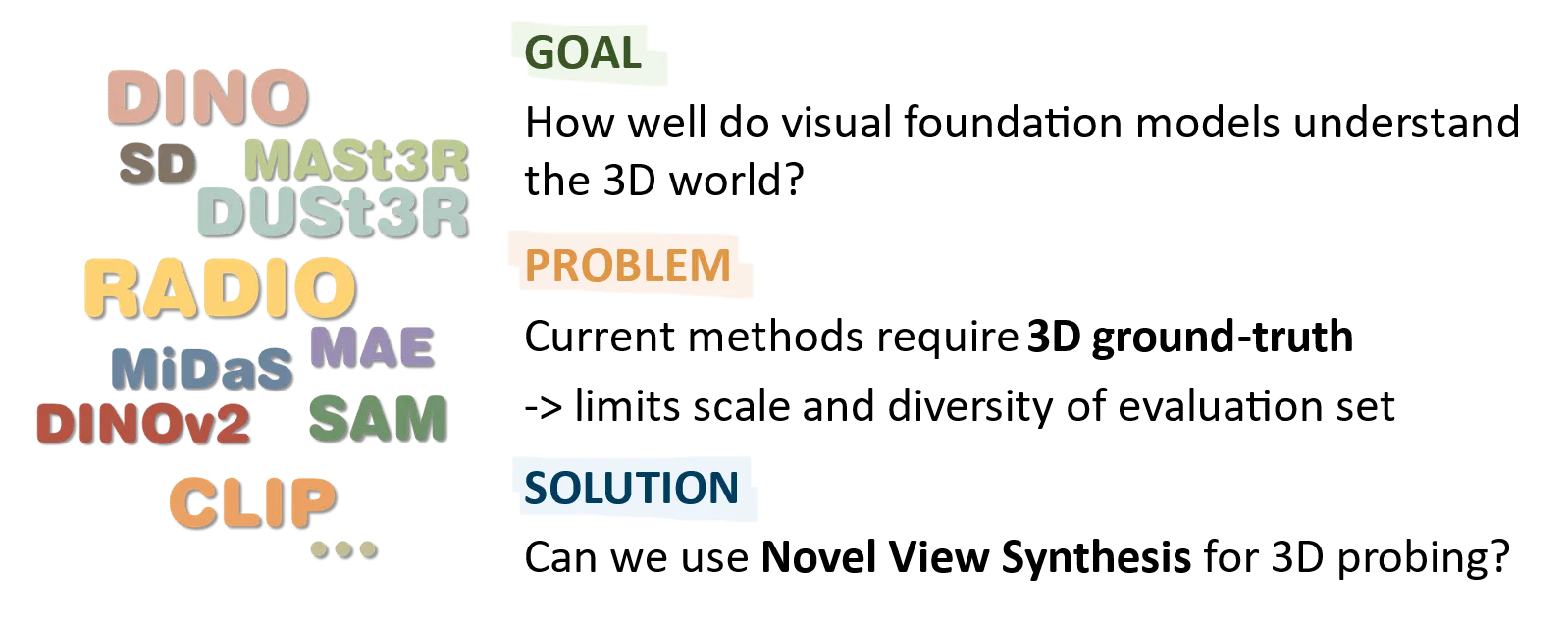

We present Feat2GS, a unified framework to probe the “texture and geometry awareness” of visual foundation models, using novel view synthesis as an effective proxy for 3D evaluation.

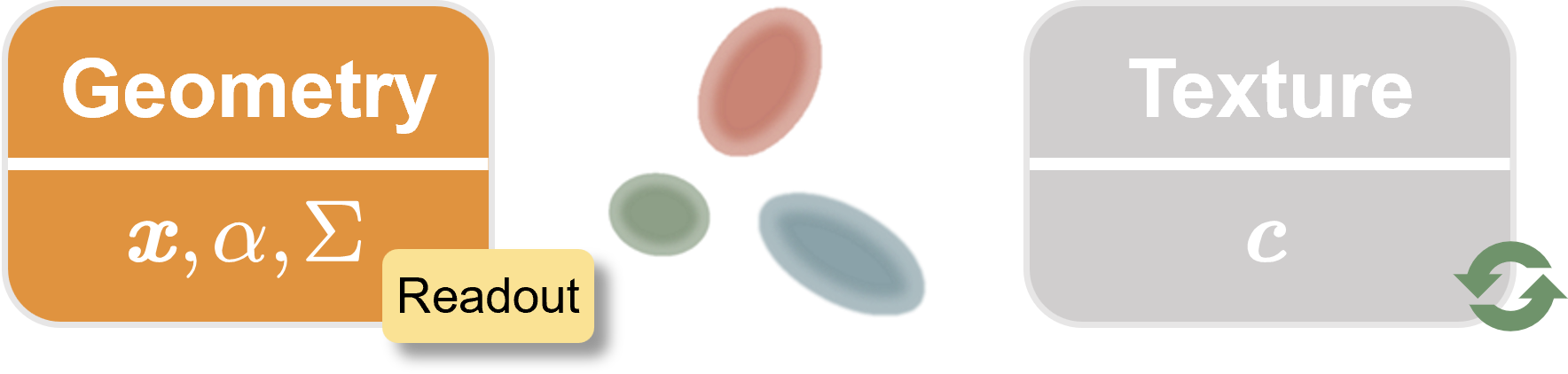

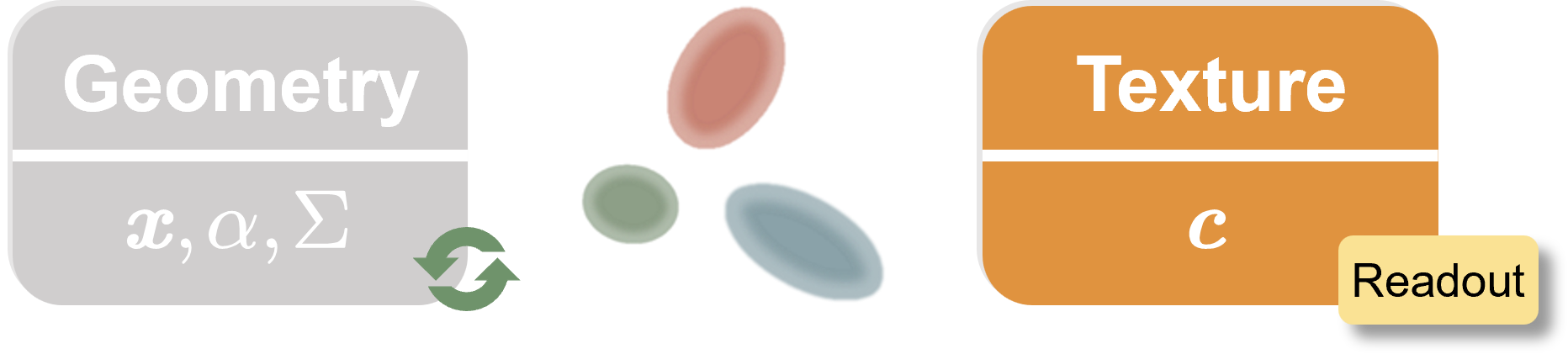

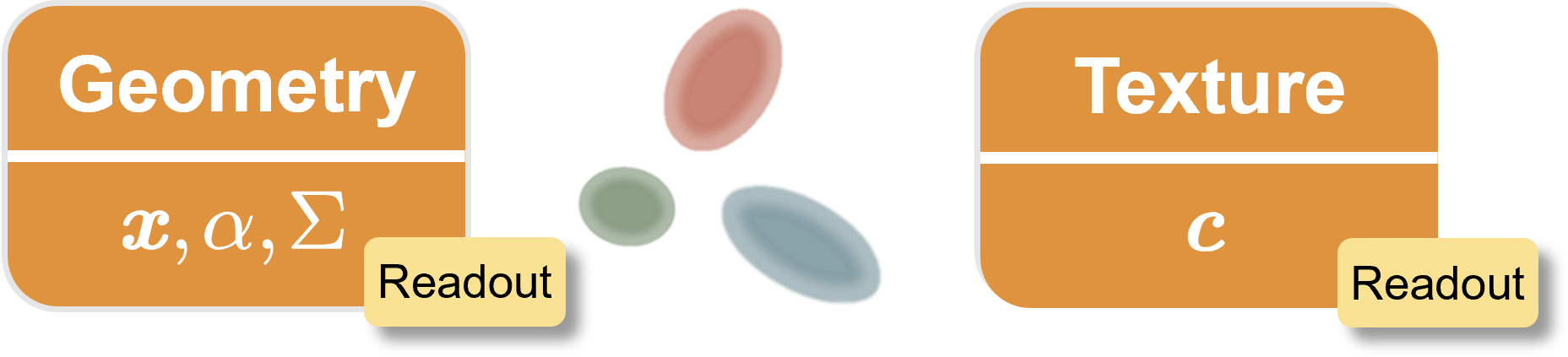

Casually captured photos are fed into visual foundation models (VFMs) to extract features, and into a stereo reconstructor to obtain relative camera poses. A lightweight readout layer, trained with a photometric loss, transforms the pixel-wise features into 3D Gaussians (3DGS). Grouping the 3DGS parameters into Geometry and Texture enables separate analysis of the geometry and texture awareness of VFMs, evaluated by novel view synthesis (NVS) quality on diverse, unposed open-world images. We conduct extensive experiments to probe the 3D awareness of several VFMs and investigate the ingredients that lead to a 3D-aware VFM. Building on these findings, we develop several variants that achieve state-of-the-art results across diverse datasets. This makes Feat2GS useful both for probing VFMs and as a simple yet effective baseline for NVS.
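The readout layer is trained with a photometric loss between rendered and captured images. As a minimal sketch, assuming images stored as nested Python lists of shape [H][W][C], the functions below compute an L1 term and optionally blend in a precomputed D-SSIM value using the weighting from the original 3DGS recipe, (1 - λ)·L1 + λ·D-SSIM with λ = 0.2. Whether Feat2GS uses exactly this weighting is an assumption here, and the names `l1_loss` and `photometric_loss` are hypothetical.

```python
def _flatten(image):
    """Flatten an [H][W][C] nested-list image into a flat list of channel values."""
    return [c for row in image for px in row for c in px]


def l1_loss(rendered, target):
    """Mean absolute per-channel difference between two images of equal size."""
    a, b = _flatten(rendered), _flatten(target)
    if not a or len(a) != len(b):
        raise ValueError("images must be non-empty and have the same number of values")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def photometric_loss(rendered, target, dssim=0.0, lam=0.2):
    """(1 - lam) * L1 + lam * D-SSIM.

    SSIM itself is not implemented here: `dssim` is assumed to be computed
    elsewhere and passed in (for example as 1 - SSIM, as in the 3DGS code).
    """
    return (1.0 - lam) * l1_loss(rendered, target) + lam * dssim
```

In practice this loss is computed on GPU tensors with automatic differentiation, so that gradients flow back through the differentiable Gaussian rasterizer into the readout layer.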

We find that 3D metrics and 2D metrics (novel view synthesis quality) are well aligned.
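One way to quantify "well aligned" is a rank correlation across the probed VFMs: if ranking the models by a 2D NVS metric gives the same order as ranking them by a 3D metric, Spearman's ρ is close to 1. The sketch below is illustrative only. Which metrics are compared, and how alignment is measured in Feat2GS, are not specified here, and the function names are hypothetical. For lower-is-better metrics (such as LPIPS or a reconstruction error), negate the scores first so both lists point in the same direction.

```python
def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the tie group while the next sorted value is equal.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        avg_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = avg_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant inputs")
    return cov / (var_x * var_y) ** 0.5


def spearman(scores_2d, scores_3d):
    """Spearman's rho between two per-model score lists (higher = better in both)."""
    if len(scores_2d) != len(scores_3d) or len(scores_2d) < 2:
        raise ValueError("need two equal-length lists with at least two models")
    return pearson(average_ranks(scores_2d), average_ranks(scores_3d))
```

With only a handful of VFMs, ρ is coarse, so it is best read alongside the per-model scores themselves.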

When probing geometry awareness, a 2-layer MLP reads out geometry parameters from 2D image features, while texture parameters are freely optimized.

When probing texture awareness, a 2-layer MLP reads out texture parameters from 2D image features, while geometry parameters are freely optimized.

When probing both geometry and texture, a 2-layer MLP simultaneously reads out all Gaussian parameters from 2D image features.
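The three probing modes differ only in which Gaussian parameters come from the readout MLP and which are free per-Gaussian variables. The pure-Python sketch below assumes one Gaussian per pixel feature and a parameter grouping chosen for illustration (position, scale, rotation, and opacity as Geometry; degree-0 RGB color as Texture). `ReadoutMLP`, `build_gaussians`, and the hidden width are hypothetical, and training (rendering plus gradient descent on the photometric loss) is omitted.

```python
import random

# Hypothetical per-Gaussian layout (an assumption for illustration).
GEOMETRY = {"position": 3, "scale": 3, "rotation": 4, "opacity": 1}
TEXTURE = {"color": 3}  # degree-0 spherical harmonics (RGB) only


def linear(x, weights, bias):
    """Dense layer: y_j = sum_i x_i * W[i][j] + b_j."""
    return [sum(xi * w_row[j] for xi, w_row in zip(x, weights)) + b
            for j, b in enumerate(bias)]


def init_layer(n_in, n_out, rng):
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out


class ReadoutMLP:
    """2-layer MLP: feature (n_in) -> hidden (ReLU) -> named Gaussian parameters."""

    def __init__(self, n_in, n_hidden, layout, rng):
        self.layout = layout
        n_out = sum(layout.values())
        self.l1 = init_layer(n_in, n_hidden, rng)
        self.l2 = init_layer(n_hidden, n_out, rng)

    def __call__(self, feature):
        hidden = [max(0.0, h) for h in linear(feature, *self.l1)]
        flat = linear(hidden, *self.l2)
        # Slice the flat output vector into named parameter groups.
        params, start = {}, 0
        for name, size in self.layout.items():
            params[name] = flat[start:start + size]
            start += size
        return params


def build_gaussians(features, mode, n_hidden=16, seed=0):
    """One Gaussian per pixel feature. `mode` selects what the MLP reads out;
    the remaining group is a free per-Gaussian variable (randomly initialized)."""
    rng = random.Random(seed)
    readout_layout = {"geometry": GEOMETRY, "texture": TEXTURE,
                      "all": {**GEOMETRY, **TEXTURE}}[mode]
    free_layout = {"geometry": TEXTURE, "texture": GEOMETRY, "all": {}}[mode]
    mlp = ReadoutMLP(len(features[0]), n_hidden, readout_layout, rng)
    gaussians = []
    for f in features:
        g = mlp(f)
        for name, size in free_layout.items():
            g[name] = [rng.gauss(0.0, 0.1) for _ in range(size)]
        gaussians.append(g)
    return mlp, gaussians
```

For example, `build_gaussians(features, "texture")` returns an MLP whose output covers only the color slot, while position, scale, rotation, and opacity are independent per-Gaussian values that an optimizer would update directly. A practical implementation would also apply the usual 3DGS activations (exponential for scale, sigmoid for opacity, and normalization of the rotation quaternion).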

We thank Yuxuan Xue, Vladimir Guzov, and Garvita Tiwari for their valuable feedback, and the members of Endless AI Lab and Real Virtual Humans for their help and discussions. This work is funded by the Research Center for Industries of the Future (RCIF) at Westlake University, the Westlake Education Foundation, the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - 409792180 (Emmy Noether Programme, project: Real Virtual Humans), and the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039A. Yuliang Xiu also received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 860768 (CLIPE project). Gerard Pons-Moll is a Professor, endowed by the Carl Zeiss Foundation, at the Department of Computer Science of the University of Tübingen, and a member of the Machine Learning Cluster of Excellence, EXC number 2064/1 – Project number 390727645.

@inproceedings{chen2025feat2gs,
  title={{Feat2GS}: Probing Visual Foundation Models with Gaussian Splatting},
  author={Chen, Yue and Chen, Xingyu and Chen, Anpei and Pons-Moll, Gerard and Xiu, Yuliang},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={6348--6361},
  year={2025}
}